ICDT

In this project, I led a team of five to build a bimanual collaboration setup driven by an LLM. The project was done for the course 11-851: Talking to Robots.

The hardware setup is as follows. We have two arms with different capabilities. The Franka Research 3 (FR3) on the left has a gripper for grasping objects, whereas the KUKA LBR 7 on the right has a cardboard broom for sweeping objects around. Both robots carry eye-in-hand cameras so they can see the world: the FR3 has a RealSense D435i, and the KUKA LBR 7 has a RealSense D405. The blue-taped area is what we designated as the “recycling area.” A microphone in the recycling area serves as the input source we use to “Talk to Robots.”

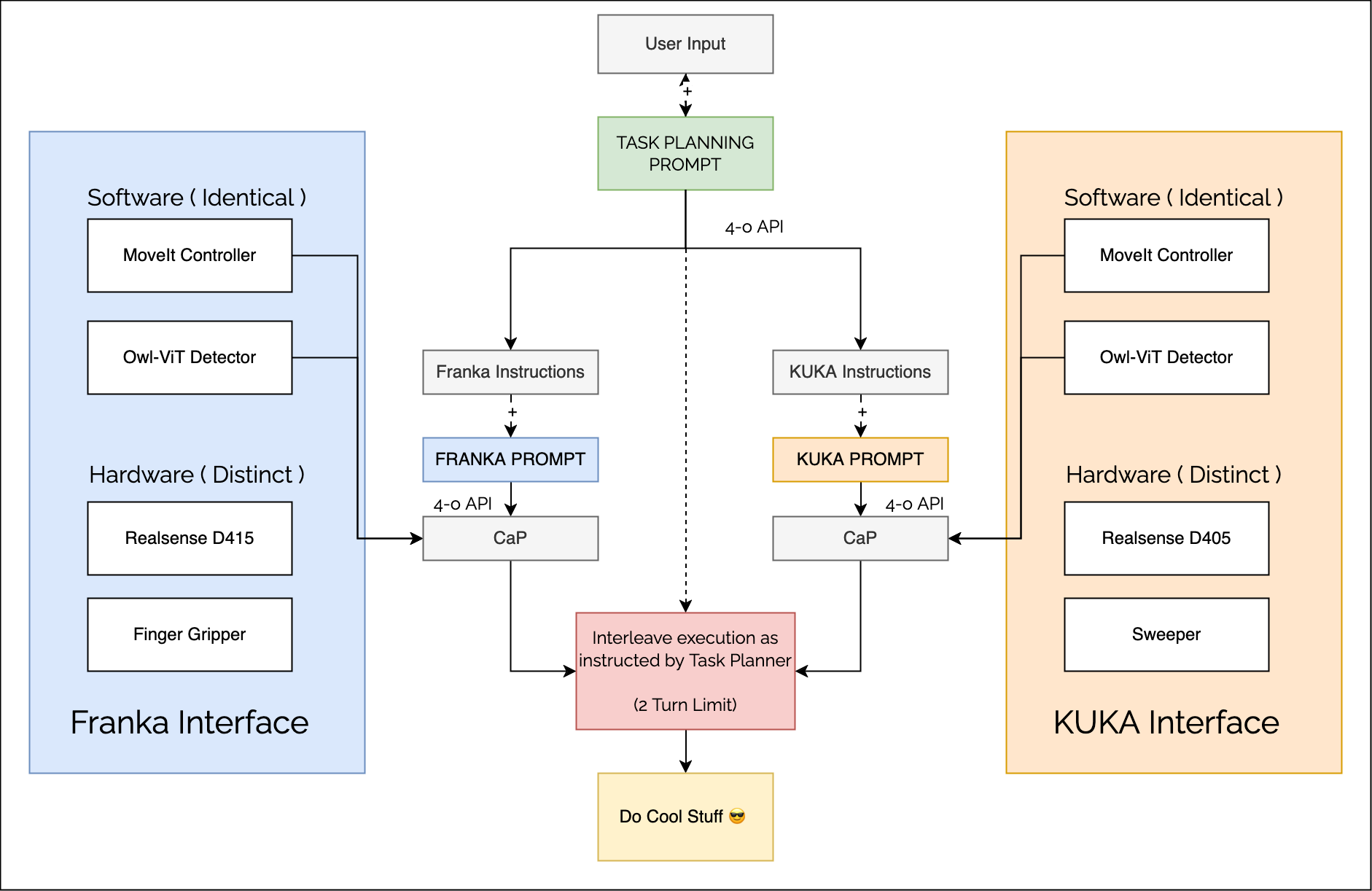

The software stack is as follows. User input comes either from text typed in a terminal or from speech recognition with OpenAI's Whisper. The input is concatenated with the task planning prompt, which describes the overall recycling scenario and its constraints. Some of the constraints concern the reachability of the arms: for example, the FR3 cannot reach into the recycling area, and the KUKA cannot cross the midline. Thus, the two robots must cooperate to finish the task.
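As a rough illustration of the concatenation step, here is a minimal sketch. The prompt wording, the `TASK_PLANNING_PROMPT` constant, and the `build_planning_prompt` helper are all hypothetical; the project's actual prompt is not reproduced in this post.

```python
# Hypothetical planning prompt; the wording used in the project is not shown here.
TASK_PLANNING_PROMPT = """\
You are planning for two robot arms that tidy a table for recycling.
Constraints:
- FR3 has a gripper but cannot reach into the recycling area.
- KUKA has a broom but cannot cross the midline of the table.
The arms must cooperate when a task spans both regions.
"""


def build_planning_prompt(user_input: str, base_prompt: str = TASK_PLANNING_PROMPT) -> str:
    """Concatenate the fixed task-planning prompt with the user's command."""
    command = user_input.strip()
    if not command:
        raise ValueError("empty user command")
    return f"{base_prompt}\nUser command: {command}\n"


if __name__ == "__main__":
    # The command may come from the terminal or from a speech transcript.
    print(build_planning_prompt("Place the can in the recycling area."))
```

Because the helper only takes a string, the same function serves both input paths: typed text and a transcribed voice command.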

Each robot also has its own prompt that lists the basic skills we coded. For example, the FR3 has the low-level skill “grasp,” whereas the KUKA has the low-level skill “sweep.” Both robots run the same software stack; to write less code, we change only the ROS parameter files between them.
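The idea of one shared code path configured per robot can be sketched as follows. The `ROBOT_PARAMS` dictionary stands in for the ROS parameter files, and its keys, the `RobotConfig` class, and the `load_robot` helper are assumptions made for illustration, not the project's actual layout.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Stand-in for the per-robot ROS parameter files; keys and layout are assumed.
ROBOT_PARAMS: Dict[str, dict] = {
    "fr3": {"camera": "RealSense D435i", "skills": ("grasp",)},
    "kuka": {"camera": "RealSense D405", "skills": ("sweep",)},
}


@dataclass(frozen=True)
class RobotConfig:
    name: str
    camera: str
    skills: Tuple[str, ...]

    def skill_prompt(self) -> str:
        """Render the per-robot prompt line listing the available skills."""
        listed = ", ".join(f"{skill}()" for skill in self.skills)
        return f"Robot {self.name} can call: {listed}"


def load_robot(name: str, params: Dict[str, dict] = ROBOT_PARAMS) -> RobotConfig:
    """Build a robot's configuration from parameters; the code is shared."""
    entry = params[name]
    return RobotConfig(name=name, camera=entry["camera"], skills=tuple(entry["skills"]))


if __name__ == "__main__":
    for robot_name in ROBOT_PARAMS:
        print(load_robot(robot_name).skill_prompt())
```

Only the parameter entries differ between the two arms, which mirrors the goal of changing configuration rather than code.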

OWL-ViT is an open-vocabulary object detection model, for which we defined some basic classes such as can, plate, block, and napkin. Its detections help the robot reason about the objects in the scene.
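One way detections could be turned into something a planner can reason over is sketched below. It does not call the detector itself: the `Detection` tuple, the `summarize_scene` helper, the pixel box format, and the 0.3 score threshold are all assumptions for illustration.

```python
from typing import Dict, List, NamedTuple, Tuple

CLASSES = ("can", "plate", "block", "napkin")  # classes named in this post


class Detection(NamedTuple):
    label: str
    score: float
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixels


def summarize_scene(
    detections: List[Detection], min_score: float = 0.3
) -> Dict[str, List[Tuple[float, float]]]:
    """Group box centers by class, most confident first, dropping weak hits."""
    scene: Dict[str, List[Tuple[float, float]]] = {}
    for det in sorted(detections, key=lambda d: d.score, reverse=True):
        if det.label not in CLASSES or det.score < min_score:
            continue
        x_min, y_min, x_max, y_max = det.box
        center = ((x_min + x_max) / 2, (y_min + y_max) / 2)
        scene.setdefault(det.label, []).append(center)
    return scene
```

Keeping every confident detection per class, rather than just the best one, matters for scenes with several objects of the same class, such as multiple blocks.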

The whole system operates on the concept of “code-as-policies.” Thus, aside from the low-level skills we provide, the entire task-planning Python code is generated by querying GPT-4o.
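The “code-as-policies” loop can be sketched roughly as below. The LLM reply is replaced by a hard-coded string, the skill functions only log their calls, and the skill signatures and the `run_generated_plan` helper are hypothetical rather than the project's real interface.

```python
from typing import Callable, Dict, List

call_log: List[str] = []  # records skill calls in place of real robot motion


def grasp(obj: str) -> None:
    """Placeholder for the FR3 grasp skill (assumed signature)."""
    call_log.append(f"grasp({obj})")


def sweep(obj: str, target: str) -> None:
    """Placeholder for the KUKA sweep skill (assumed signature)."""
    call_log.append(f"sweep({obj} -> {target})")


SKILLS: Dict[str, Callable] = {"grasp": grasp, "sweep": sweep}

# Hard-coded stand-in for the Python code the LLM would return.
GENERATED_PLAN = '''
grasp("can")
sweep("can", "recycling area")
'''


def run_generated_plan(code: str, skills: Dict[str, Callable]) -> None:
    """Execute generated code with only the given skills in scope.

    Emptying __builtins__ limits accidental calls; it is not a security sandbox.
    """
    namespace = {"__builtins__": {}, **skills}
    exec(code, namespace)


if __name__ == "__main__":
    run_generated_plan(GENERATED_PLAN, SKILLS)
    print(call_log)
```

Exposing only the skill functions keeps the generated program tied to what the robots can actually do, although real deployments would need stronger isolation than this.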

Single arm demo

Voice prompt: “Pick up the can and place it on top of the napkin.”

Text prompt: “Clean the plate by moving the block somewhere to the left and then place the can on the plate.”

Text prompt: “The table has gotten very dirty. Can you help clean it up?”

It is fascinating to see how the LLM can resolve ambiguity such as “somewhere to the left” and reason about the black shapes (probably detected only as blocks) that represent the stains on the table.

Bimanual collaboration demo

Text prompt: “Place the can in the recycling area.”

This is exactly the task that we want to achieve.

Text prompt: “A can accidentally went into the recycling area. Can you bring it out of that area and then put it on the plate?”

This is the reverse of the task that we want to achieve! It’s cool to see the robot still understands the goal.

Misc.

For a detailed report, please see the following.